Understanding and Mitigating "Agent Washing"

The current technological landscape is experiencing an unprecedented surge in interest surrounding artificial intelligence (AI) agents, recognized for their potential to operate autonomously and execute complex tasks across diverse industries. This rapidly evolving domain presents transformative opportunities for organizations seeking to enhance efficiency and unlock new capabilities. However, amidst this enthusiasm, a concerning phenomenon known as "Agent Washing" has emerged. This practice involves vendors exaggerating the capabilities of their products by rebranding existing technologies, such as traditional AI assistants, Robotic Process Automation (RPA) tools, and chatbots, as sophisticated AI agents, often without substantiating the true capabilities of these claimed agents.

A fundamental distinction separates genuine AI agents from "agent-washed" offerings. Authentic AI agents possess core attributes such as true autonomy, continuous learning, adaptability, and the ability to create measurable value. In contrast, products subjected to "agent washing" typically lack these critical functionalities, often relying on rigid, pre-programmed systems that do not evolve or adapt over time. The primary drivers behind this deceptive practice include a desire to capitalize on the intense AI hype, secure investment, and gain a perceived competitive advantage in a burgeoning market.

The implications for businesses are significant, encompassing risks such as misguided investments in ineffective solutions, missed opportunities to leverage true AI capabilities, operational stagnation, vendor lock-in, and a broader erosion of trust in the AI industry. This practice is not isolated; it represents a specific manifestation of the broader "AI washing" trend, drawing parallels with other deceptive marketing tactics like "greenwashing" and "ethics washing," all of which involve signaling commitment to a desirable attribute without genuine underlying practice.

The emergence of "agent washing" is a direct consequence of the AI hype cycle. Periods of intense technological excitement, particularly when definitions and standards are still fluid, invite exaggerated claims and misleading marketing. The prevalence of "agent washing" therefore elevates due diligence from a best practice to a strategic imperative. Without the ability to distinguish genuine AI agents from "washed" products, organizations risk not only financial losses but also being outpaced by competitors who successfully adopt and leverage authentic AI agent capabilities.

To mitigate these risks, organizations must adopt rigorous evaluation frameworks, cultivate robust internal expertise, and remain vigilant against misleading claims. This proactive stance is particularly crucial given the increasing regulatory scrutiny and enforcement actions by bodies such as the U.S. Securities and Exchange Commission (SEC) and the Department of Justice (DOJ).

The Rise of AI Agents and the Challenge of Hype

The current technological landscape is characterized by a significant and accelerating interest in artificial intelligence (AI) agents. These advanced software entities are designed to operate autonomously, executing complex tasks and making decisions with minimal human intervention across a multitude of industries. The potential for AI agents to revolutionize business operations, drive unprecedented efficiencies, and unlock new strategic capabilities is widely recognized, fueling considerable excitement and investment. This burgeoning field promises transformative opportunities for organizations willing to embrace its innovations.

However, the rapid pace of this technological evolution, while offering immense promise, has also inadvertently created an environment ripe for market confusion and misrepresentation. The intense enthusiasm surrounding AI agents has given rise to a phenomenon known as "Agent Washing". This practice involves vendors exploiting the prevailing hype by misrepresenting the capabilities of their products, often by simply relabeling existing, less sophisticated technologies as cutting-edge AI agents.

The swift conceptual development of "AI agents" further contributes to this challenge. As the field is relatively new, a universally solidified and widely understood definition of what constitutes a true AI agent is still being established. This definitional ambiguity creates a vacuum that unscrupulous vendors can readily fill with misleading or exaggerated claims, making it difficult for buyers to differentiate genuine innovation from mere marketing rhetoric. The absence of mature, widely adopted standards allows opportunistic vendors to rebrand products, such as AI assistants, Robotic Process Automation (RPA) tools, and chatbots, to capture buyer attention without possessing substantial agentic capabilities.

This dynamic presents a unique challenge for businesses. While the "transformative opportunities" of AI agents create considerable pressure for early adoption, a hasty approach without proper discernment can paradoxically lead to a "first mover disadvantage." Companies that rush to invest in "agent-washed" solutions risk significant financial losses and operational stagnation, missing out on the genuine potential of intelligent automation. Indeed, many current agentic AI projects are described as early-stage experiments or proofs of concept, often driven more by hype than by sound application, leading to a significant percentage of such projects being abandoned within two years. This underscores that the perceived advantage of early market entry can be negated by poor investment decisions, transforming a potential lead into a costly setback if the underlying technology is not genuinely agentic. This report aims to provide clarity on "agent washing," distinguish it from genuine AI, explore its motivations and significant risks, and offer practical guidance for strategic leaders to navigate this complex marketplace responsibly.

Defining "Agent Washing": Deception in the AI Era

"Agent Washing" represents a specific form of deceptive marketing that has become prevalent in the AI sector. It describes a phenomenon where vendors exaggerate the capabilities of their products by rebranding existing technologies—such as traditional AI assistants, Robotic Process Automation (RPA) tools, and chatbots—as sophisticated AI agents, often without verifying the true, advanced capabilities of these claimed agents. This practice essentially involves mislabeling simpler automation tools, which may rely on basic rule-based systems or rudimentary algorithms, with a "fancy new label" to create an illusion of advanced AI capabilities and capture market attention.

The fundamental distinction between "agent-washed" products and true AI agents lies in their core operational characteristics. Products subjected to "agent washing" typically lack autonomous decision-making, continuous learning, adaptability to complex environments, and the ability to create measurable value beyond their initial design. Their performance often remains static, struggling to improve over time, which sharply contrasts with the dynamic and evolving nature of genuine AI agents.

It is crucial to understand what is often misrepresented or "agent-washed" and what does not constitute a true AI agent:

Large Language Models (LLMs): While powerful components, LLMs are models themselves, not autonomous AI agents. They can be integrated into an agent, but a standalone LLM does not fulfill the definition of an AI agent.

Simple Instructions or Subroutines: A task performed by a specific set of instructions or a subroutine, lacking broader perception and decision-making, is not an AI agent.

Basic Automation Software/Procedures: Generic functionality within automation software or routine processes do not qualify as AI agents.

Robotic Process Automation (RPA) Workflows: Despite frequent rebranding, automated processes built from RPA workflows are not AI agents.

Conversational Assistants/Chatbots: Assistants of any type, including conversational assistants and chatbots, are useful but generally do not possess the full autonomous capabilities of a true AI agent, even though they are commonly "agent-washed".

AI Sales Assistants (as mere tools): Even if built with AI, an AI sales assistant that primarily supports human representatives without significant autonomous capability beyond tool functionality is often considered "agent-washed" if marketed as a full agent.

A critical underlying issue exploited in "agent washing" is the deliberate conflation of AI tools with fully autonomous AI agents. The mere presence of any AI component or automation capability is leveraged to market a product as a complete, sophisticated, and autonomous agent, blurring the crucial distinction between a foundational component and a fully realized, intelligent system. This deliberate semantic blurring is a key characteristic of the deceptive practice.

Furthermore, a fundamental difference between "agent-washed" products and true AI agents often boils down to their operational paradigm: whether they are rigid, rule-based systems or genuinely adaptive and continuously learning entities. Real AI solutions offer adaptability and intelligence that extends beyond predefined rules, enabling intelligent decision-making based on complex contexts and real-time data. In contrast, "agent-washed" technology typically relies on rigid, pre-programmed sequences that fail to adapt to changing circumstances or learn from interactions. This technical distinction between a static, rule-based system and a dynamic, learning one is a crucial differentiator, and vendors engaged in "washing" actively obscure this reality.
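The contrast between a static, rule-based system and a learning one can be made concrete with a toy simulation. The following is a minimal sketch, not a description of any real product: the class names, the `run` helper, the action labels, and the epsilon-greedy update are all illustrative assumptions chosen to show the difference in behavior.

```python
import random


class RuleBasedResponder:
    """Static system: always applies the same pre-programmed rule."""

    def __init__(self, fixed_action):
        self.fixed_action = fixed_action

    def choose(self):
        return self.fixed_action

    def learn(self, action, reward):
        pass  # no feedback loop, so behavior never changes


class AdaptiveResponder:
    """Learning system: tracks the average reward per action (epsilon-greedy)."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {a: 0 for a in self.actions}
        self.values = {a: 0.0 for a in self.actions}

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)  # explore occasionally
        return max(self.actions, key=lambda a: self.values[a])  # otherwise exploit

    def learn(self, action, reward):
        self.counts[action] += 1
        # Incremental update of the running mean reward for this action.
        self.values[action] += (reward - self.values[action]) / self.counts[action]


def run(responder, best_action, steps=500):
    """Simulate feedback and return the success rate over the final 100 steps."""
    rewards = []
    for _ in range(steps):
        action = responder.choose()
        reward = 1.0 if action == best_action else 0.0
        responder.learn(action, reward)
        rewards.append(reward)
    return sum(rewards[-100:]) / 100


static_rate = run(RuleBasedResponder("email"), best_action="call")
adaptive_rate = run(AdaptiveResponder(["email", "call", "chat"]), best_action="call")
```

In this toy setting the rule-based responder never recovers from a wrong rule, while the adaptive one shifts toward the action that works. A real evaluation would need production data rather than a simulated reward.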

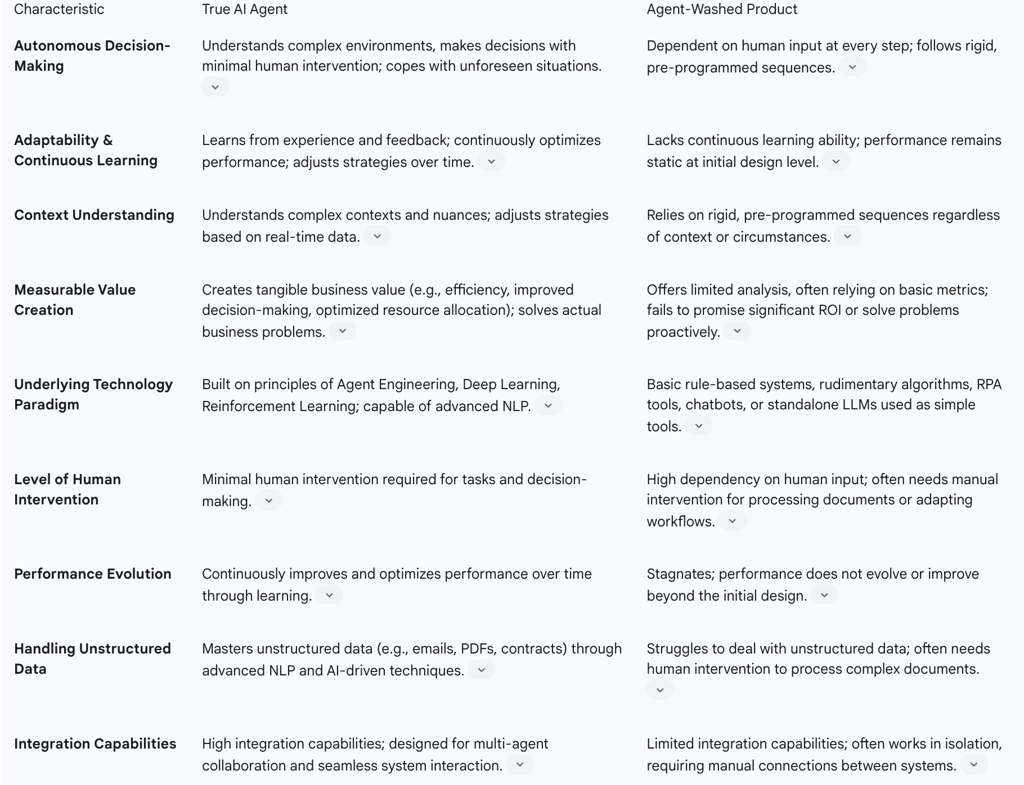

To provide further clarity, the following table outlines the key distinctions between a true AI agent and an "agent-washed" product:

Table: Key Distinctions: True AI Agent vs. Agent-Washed Product

The Anatomy of a True AI Agent: Core Capabilities and Characteristics

A true AI agent is a sophisticated software entity that leverages artificial intelligence not merely to process information, but to perceive its environment, make informed decisions, take purposeful actions, and autonomously or semi-autonomously pursue a given goal within a digital or physical setting. To be genuinely agentic, an application must demonstrate the capacity to complete complex tasks and engage in long-term, goal-oriented planning with minimal human intervention. This distinguishes it from simpler automation tools or standalone AI components.

Several key capabilities define a genuine AI agent:

Autonomous Decision-Making Ability: A hallmark of a true AI agent is its capacity to understand complex environments, make independent decisions, and perform tasks with minimal human oversight. This capability extends beyond merely executing scheduled tasks to effectively coping with unforeseen situations and adapting its actions based on real-time understanding. True agents do not passively receive orders; they comprehend their surroundings and act accordingly to achieve their objectives.

Adaptability and Continuous Learning: Genuine AI agents possess the profound ability to learn from experience and feedback, continuously optimizing their performance over time. This learning is not confined to basic pattern recognition but encompasses understanding context, adjusting strategies, and refining decision-making processes. This often involves advanced techniques such as Reinforcement Learning, allowing the agent to improve its efficacy through iterative interaction with its environment.

Natural Language Understanding and Communication: A mature AI agent should be able to comprehend natural language instructions and communicate effectively, both with humans and with other systems. This includes advanced Natural Language Processing (NLP) capabilities, enabling the agent to draw meaningful insights from complex, unstructured data formats such as emails, PDFs, and contracts, thereby automating tasks like summarizing reports or extracting key information.

Measurable Value Creation: Beyond technological sophistication, a true AI agent must demonstrate its ability to create tangible, measurable value for the enterprise. This includes improving efficiency, enhancing decision-making processes, or optimizing resource allocation. The ultimate success of an intelligent agent product is determined by its capacity to solve actual business problems and deliver concrete benefits to users, not merely by its advanced conceptual marketing. This emphasis on demonstrable value creation serves as a critical litmus test, shifting the focus from technological novelty to tangible return on investment as the primary metric of success.

Multi-Agent Collaboration: As AI technology continues to advance, a significant trend is the development of multi-agent collaboration. A true AI agent should possess the capability to work effectively and intelligently with other agents to accomplish complex tasks, indicating a shift towards more integrated and distributed intelligent systems that can collectively achieve broader organizational goals.

Robustness and Reliability: Genuine AI agents are expected to perform consistently across diverse scenarios, effectively recover from errors, handle edge cases gracefully, and exhibit resilience against adversarial attacks. Their reliability is also assessed by their consistent performance over time, the reproducibility of their results, and their adaptability to unexpected inputs, all of which foster trust in their sustained capability.

Efficiency and Scalability: True agents are designed to efficiently utilize resources such as time and computing power. They also possess the scalability to handle growing amounts of work and operate effectively in larger, more complex environments without significant degradation in performance.

The comprehensive definition of a true AI agent signifies a crucial evolutionary step beyond standalone Large Language Models (LLMs). While LLMs are powerful components, they are merely models, not autonomous AI agents themselves. True agents are capable of taking action, moving beyond the information processing and content generation exemplified by tools like ChatGPT. This understanding reveals that while LLMs serve as foundational tools for understanding and generation, they require additional layers of intelligence, such as planning modules, action execution capabilities, and continuous learning loops, to form a truly autonomous, goal-pursuing agent. This distinction is vital for comprehending the architectural shift in AI and discerning genuine agentic capabilities.
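To illustrate how a model becomes one component inside an agent, the sketch below wraps a planner in a decide, act, and remember loop. It is a simplified illustration under stated assumptions: the `Agent` class, the `toy_planner` function (a rule-driven stand-in for an LLM-backed planner), and the `lookup` and `summarize` tools are hypothetical, and the sketch omits the continuous learning loop entirely.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

HistoryEntry = Tuple[str, str, str]  # (tool name, argument, observation)


@dataclass
class Agent:
    # plan(goal, history) returns (tool_name, argument), or ("done", result).
    plan: Callable[[str, List[HistoryEntry]], Tuple[str, str]]
    tools: Dict[str, Callable[[str], str]]
    history: List[HistoryEntry] = field(default_factory=list)
    max_steps: int = 10

    def run(self, goal: str) -> str:
        for _ in range(self.max_steps):
            tool_name, argument = self.plan(goal, self.history)  # decide
            if tool_name == "done":
                return argument
            observation = self.tools[tool_name](argument)  # act
            self.history.append((tool_name, argument, observation))  # remember
        return "stopped: step budget exhausted"


def toy_planner(goal: str, history: List[HistoryEntry]) -> Tuple[str, str]:
    """Hypothetical stand-in for an LLM-backed planner."""
    if not history:
        return ("lookup", goal)
    last_tool, _, last_observation = history[-1]
    if last_tool == "lookup":
        return ("summarize", last_observation)
    return ("done", last_observation)


tools = {
    "lookup": lambda query: f"raw record for {query}",
    "summarize": lambda text: text.upper(),
}

agent = Agent(plan=toy_planner, tools=tools)
result = agent.run("invoice 17")
```

The point of the structure is that the model only proposes the next step; the surrounding loop executes tools, records observations, and enforces a step budget. A standalone LLM call has none of these.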

The Risks and Consequences of "Agent Washing"

The prevalence of "agent washing" carries significant risks and consequences for businesses, extending beyond mere financial implications to impact operational efficacy, market integrity, and trust in the broader AI ecosystem.

One of the most immediate and tangible risks is misguided investments and missed opportunities. Companies that fall prey to "agent washing" risk getting "locked into solutions that fail to promise any measure of ROI," leading to inflexible workflows and limited scalability. This means they miss out on the genuine potential of intelligent automation to proactively solve problems and continuously learn from interactions. The impact of such misapplication is substantial: Gartner predicts that over 40% of agentic AI projects will be canceled by the end of 2027, with many of them driven by hype rather than genuine capability.

This often translates into operational stagnation. Instead of achieving transformative change, businesses may find themselves with "agent-washed" technology that follows linear, one-size-fits-all engagement processes and fails to improve over time. Such systems cannot adapt to changing circumstances, leaving organizations unable to leverage the true dynamism and problem-solving capabilities of genuine AI agents.

Furthermore, "agent washing" contributes to a broader erosion of trust and market integrity. As a subset of "AI washing," this deceptive marketing practice raises serious concerns regarding transparency, compliance with securities regulations, and overall consumer and investor confidence in the AI industry. Such misrepresentations can ultimately hamper legitimate advancements in AI by fostering widespread skepticism. If consumers or businesses discover they have been misled by exaggerated claims, it can lead to a systemic erosion of confidence, potentially impacting the entire sector and making it harder for even ethical AI companies to gain market acceptance. This creates a "trust deficit" across the AI industry, impeding the adoption of legitimate, beneficial AI technologies.

There are also significant security and governance concerns. The increased integration of AI agents, even those that are "washed," introduces greater exposure to security risks and complex governance challenges. Moreover, many AI systems, particularly those lacking true explainability, can operate as "black boxes" where their decision-making processes are opaque and difficult to scrutinize. This lack of transparency can erode trust, hinder accountability, and prevent meaningful interventions when issues arise. This opacity exacerbates the challenge of assigning responsibility when malfunctions or unintended consequences occur, creating a significant "accountability gap" that poses legal, ethical, and operational risks. The complexity of AI systems, combined with the deliberate obfuscation inherent in "agent washing," makes it exceedingly difficult to pinpoint fault, rectify issues, or ensure compliance.

Vendor lock-in and interoperability challenges are another consequence. "Agent-washed" technology often operates in isolation with limited integration capabilities, necessitating manual connections between disparate systems. This can significantly reduce flexibility, impede innovation, and lead to undesirable dependence on a single vendor's ecosystem. Cost overruns are also common, as unclear usage controls and variable pricing models associated with misrepresented AI agent solutions can result in unexpected and significant financial burdens for businesses. Finally, companies that either engage in "agent washing" or become victims of it by investing in fraudulent solutions risk substantial reputational harm, which can be difficult and costly to repair.

Motivations Behind the Deception

The proliferation of "agent washing" is driven by a confluence of powerful market forces and strategic objectives, primarily centered on capitalizing on the burgeoning interest in AI.

A primary motivation is to capitalize on the intense AI hype that currently surrounds artificial intelligence, particularly autonomous agents. Vendors leverage this market enthusiasm to "capture buyers' attention" by labeling their products with the latest buzzwords, even if the underlying technology does not genuinely warrant such claims. This phenomenon bears a striking resemblance to the "dot-com bubble" era, where businesses would append "dot-com" to their names to artificially inflate their valuations and attract investment. The prevailing atmosphere of excitement and rapid innovation creates a fertile ground for such exaggerated claims.

This leads directly to another significant motivation: attracting investment and boosting valuations. Companies frequently engage in "AI washing," and by extension "agent washing," as a strategic ploy to secure funding and attract investment. There is a strong perception that an "AI-powered" label will make their offerings more appealing to venture capitalists and other investors. The case of Albert Saniger, founder of Nate, serves as a stark illustration. Saniger was charged by both the SEC and the DOJ for allegedly making false and misleading statements to investors about Nate's purported AI technology; according to the charges, the work attributed to AI was in fact performed manually by overseas workers. This example highlights how the promise of cutting-edge AI can be used to defraud investors and attract capital under false pretenses.

Market competition also plays a crucial role. In a highly competitive and rapidly evolving technological landscape, companies may feel immense pressure to appear innovative and keep pace with market trends. This can lead them to resort to "washing" tactics in response to competitors who might genuinely be integrating advanced AI into their offerings. This competitive pressure, combined with a "Fear of Missing Out" (FOMO) among businesses and investors, creates a powerful incentive for unscrupulous vendors to make exaggerated claims, preying on the market's anxiety about being left behind in the AI revolution.

Fundamentally, "agent washing" is employed as a deceptive marketing tactic designed to mislead customers, inflate product perceptions, and ultimately boost sales and market share. This strategic misrepresentation is a calculated effort to gain a commercial advantage.

While not a direct motivation for committing deception, a lack of internal capability within buying organizations to accurately evaluate and apply AI agent technologies can inadvertently create an environment where vendor propaganda and "washing" tactics are more likely to succeed. This susceptibility can further incentivize unscrupulous vendors, as they face less informed scrutiny from potential clients.

The rapid pace of technological innovation, particularly in AI, often outstrips the speed at which regulatory frameworks can be developed and implemented. This creates a temporary "regulatory lag" or vacuum that some companies strategically exploit for deceptive practices before robust enforcement mechanisms are fully established. While regulatory bodies like the SEC and DOJ are now taking action, their approach often involves applying existing frameworks to new technologies, suggesting a period where such practices could proliferate with less immediate oversight. This implies that deceptive actors capitalize on the window of opportunity before the regulatory environment fully matures and becomes more prescriptive and proactive in addressing AI-specific misrepresentations.

"Agent Washing" in Broader Context: A Pattern of Deceptive Marketing

"Agent washing" is not an isolated phenomenon but rather a specific, evolving manifestation of a broader pattern of deceptive marketing practices that have emerged across various industries. It is a direct subset of the larger "AI washing" phenomenon, which broadly refers to the deceptive marketing tactic of overstating the role and integration of artificial intelligence within a product or service. This includes claiming AI involvement without actual use or misusing buzzwords like "smart" or "AI-powered" when the product lacks genuine AI capabilities.

The concept of "AI washing," and by extension "agent washing," is explicitly derived from and analogous to "greenwashing". "Greenwashing" is the practice where companies mislead consumers into believing that they or their products are sustainable or environmentally friendly through false or misleading claims. It often involves "whitewashing" a company's image or hiding unsustainable actions under a "green façade" to capitalize on growing environmental awareness without genuine commitment to environmental care. Both "AI washing" and "greenwashing" exploit heightened consumer awareness or market trends – AI hype versus environmental consciousness – and frequently employ vague or misleading labels, emphasize minor improvements as major impacts, and ultimately undermine trust in legitimate efforts within their respective domains.

Another parallel practice is "ethics washing." This is defined as feigning ethical consideration to improve how a person or organization is perceived, often seen in ostensible diversity, equity, and inclusion (DEI) or environmental, social, and governance (ESG) initiatives. In the context of AI, ethics washing involves creating a "superficially reassuring but illusory sense that ethical issues are being adequately addressed, to justify pressing forward with systems that end up deepening current patterns". Similar to "agent washing," ethics washing involves signaling a commitment to a desirable attribute (ethics) without genuinely having or sufficiently putting that commitment into practice. It can function as a marketing instrument to prevent or mitigate reputational harm or to forestall government regulation, often manifesting through "symbolic" efforts, such as establishing ethics offices that lack real power or influence within the organizational structure.

The unifying theme across "agent washing," "AI washing," "greenwashing," and "ethics washing" is a recurring business model where companies prioritize "virtue signaling"—appearing innovative, ethical, or sustainable—over substantive investment and genuine capability. This strategy leverages public perception and market trends for financial gain. In an increasingly reputation-driven and socially conscious market, the perception of aligning with desirable values has become a marketable commodity, often prioritized over the actual, costly, and difficult practice of genuine implementation. This pattern represents a broader landscape of "deceptive pretenses" in corporate communication.

This landscape of deception is also characterized by its evolution. As public and regulatory awareness grows around one form of "washing" (e.g., greenwashing), deceptive practices tend to evolve and migrate to new, less scrutinized domains (e.g., AI washing, and subsequently "agent washing"). This indicates a continuous dynamic between regulators and informed consumers on one side, and unscrupulous vendors seeking to exploit new areas of hype on the other. This implies that as older forms of "washing" become too well-known or heavily scrutinized, new buzzwords and technological frontiers become the next target for similar deceptive practices, necessitating continuous vigilance and adaptive responses from the market and regulatory bodies.

Safeguarding Against "Agent Washing": A Strategic Guide for Organizations

To effectively navigate the AI agent marketplace and mitigate the risks associated with "agent washing," organizations must adopt a strategic and comprehensive approach. This involves establishing clear internal definitions, conducting rigorous evaluations, fostering internal expertise, and implementing AI agents strategically.

7.1 Establishing Internal Clarity and Consensus

A foundational step for any organization is to establish a clear, universally understood definition of AI agents and their specific capabilities within their own enterprise. This involves creating a comprehensive list of potential high-value use cases to guide both IT and business units in the development, evaluation, and deployment of genuine agents. By fostering this internal clarity and building a robust internal knowledge base, companies can significantly reduce their dependence on external vendor propaganda and empower themselves to make more independent and discerning decisions regarding AI investments. This internal capacity allows for a more informed assessment of vendor claims, transforming a defensive measure into a strategic competitive capability.

7.2 Rigorous Vendor Evaluation and Scrutiny

Organizations must adopt an exhaustive and critical approach to evaluating vendors' products, moving beyond marketing rhetoric to demand tangible evidence of capabilities.

Request Detailed Demonstrations and Deployment References: It is imperative to demand detailed demonstrations of the AI agent's capabilities in action. Specifically, organizations should request deployment references related to enterprise business application scenarios that are similar to their target use case, allowing for a realistic assessment of the agent's performance in a relevant context.

Scrutinize Vendor Claims and Seek Hard Evidence: All vendor announcements and marketing materials must be carefully reviewed. Crucially, organizations should request hard evidence and scientific proof to substantiate any performance claims regarding the usage of AI tools.

Collaborate with Technical Teams: Close collaboration with internal technical teams is essential to analyze the underlying AI technology. Critical questions should be posed regarding how the AI system learns (e.g., supervised, unsupervised, reinforcement learning), the type of data it learns from, the specific adaptations it can make, and how it handles unexpected or out-of-distribution data. It is vital to verify that the product does not primarily rely on simple statistical analyses, predetermined rules, or basic automation lacking authentic learning and adaptive capabilities.

Focus on Business Value Over Conceptual Marketing: When evaluating AI agent products, enterprises should prioritize whether the solution can genuinely solve actual business problems and create measurable value, rather than being swayed solely by conceptual marketing or advanced technological descriptions. The ultimate success of an AI agent is its ability to deliver tangible ROI.
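One way to turn the question about unexpected or out-of-distribution data into a concrete test is to compare a product's accuracy on familiar phrasing against its accuracy on rephrased inputs with the same intent. The sketch below is only an illustration: `robustness_gap`, `keyword_router` (a hypothetical rule-based product), and the sample cases are assumptions, and a real evaluation would call the vendor's system with a much larger test set.

```python
from typing import Callable, List, Tuple

Case = Tuple[str, str]  # (input text, expected label)


def accuracy(system: Callable[[str], str], cases: List[Case]) -> float:
    """Fraction of cases where the system returns the expected label."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = sum(1 for text, expected in cases if system(text) == expected)
    return correct / len(cases)


def robustness_gap(
    system: Callable[[str], str], familiar: List[Case], unfamiliar: List[Case]
) -> float:
    """Accuracy on familiar inputs minus accuracy on unfamiliar ones."""
    return accuracy(system, familiar) - accuracy(system, unfamiliar)


def keyword_router(text: str) -> str:
    """Hypothetical rule-based product: routes on a single fixed keyword."""
    return "refund" if "refund" in text.lower() else "other"


familiar = [("I want a refund", "refund"), ("Where is my order?", "other")]
unfamiliar = [
    ("Please return my money", "refund"),
    ("Reimburse me for this", "refund"),
]

gap = robustness_gap(keyword_router, familiar, unfamiliar)
```

A large gap suggests the product depends on predetermined rules or surface patterns. A small gap is not proof of genuine adaptability, but it is a useful first filter before deeper technical review.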

7.3 Developing Internal Expertise and Skills

Strengthening internal talent training and knowledge accumulation is paramount. This involves investing in new AI-specific development practices and fostering skills that scale across teams. Organizations with strong internal AI literacy are better positioned not only to defend against deceptive practices but also to identify and leverage true AI agent capabilities for strategic gain.

7.4 Strategic Implementation and Continuous Monitoring

A cautious and strategic approach to implementation is advised. Starting with low-risk pilot projects is a crucial de-risking mechanism. This allows organizations to reduce uncertainty and demonstrate concrete business value in a controlled environment before committing to significant investments and widespread implementation. This iterative approach enables learning and adaptation without exposing the organization to large-scale, potentially costly failures.

Application development leaders should continuously monitor the evolving landscape of AI agents and gain a deeper understanding of where different products fit within the spectrum of agent capabilities. To extract real value, organizations must focus on designing AI-agent-focused workflows from the ground up, entirely rethinking processes to leverage the agents effectively for enterprise productivity, rather than merely augmenting individual tasks.

7.5 Utilizing Comprehensive Evaluation Frameworks

Employing structured metrics and agent-specific diagnostics that look beyond final outputs is vital to assess what the agent knows, what actions it takes, and how it plans.

Key Performance Aspects: Evaluate accuracy (how often it produces correct output or makes the right decision), effectiveness (how well it achieves goals), efficiency (resource utilization, task completion times), scalability (ability to handle growing workloads), robustness (performance in diverse scenarios, error recovery, handling edge cases), and reliability (consistency over time, reproducibility).

LLMs as Judges: Leverage Large Language Models (LLMs) as "judges" to automate and enhance the evaluation process by comparing the AI agent's responses to predefined answers or human-generated responses.

Established Frameworks: Consider established frameworks and tools like TensorFlow Extended (TFX) for model evaluation, Fairlearn for assessing fairness, and MLflow for tracking experiments.

Internal Path Evaluation: Crucially, evaluate the internal "path" the agent takes during execution, checking for repetitions, loops, or unnecessary steps that can indicate bugs or inefficiencies. This includes assessing the router's accuracy in choosing the right skill and extracting parameters.
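
As a rough illustration of internal path evaluation, the sketch below inspects a recorded agent trace for repeated calls, back-to-back loops, and router mistakes. The `Step` record, the skill names, and the hand-labelled `expected_skill` field are hypothetical; a real agent framework will expose its own trace format, so treat this as a starting point rather than a reference implementation.

```python
from collections import Counter
from typing import NamedTuple, Optional


class Step(NamedTuple):
    """One step of an agent run (hypothetical trace format)."""
    skill: str                            # skill the router chose
    params: tuple                         # extracted parameters, as a hashable tuple
    expected_skill: Optional[str] = None  # hand-labelled correct skill, if any


def repeated_steps(trace):
    """Return each (skill, params) call that occurs more than once, with its count."""
    counts = Counter((s.skill, s.params) for s in trace)
    return {call: n for call, n in counts.items() if n > 1}


def find_loop(trace, max_period=4):
    """Return the first block of steps that is immediately repeated, or None.

    A block of length p starting at index i counts as a loop when
    calls[i:i+p] == calls[i+p:i+2p], comparing skill and params only.
    """
    calls = [(s.skill, s.params) for s in trace]
    for period in range(1, max_period + 1):
        for i in range(len(calls) - 2 * period + 1):
            if calls[i:i + period] == calls[i + period:i + 2 * period]:
                return calls[i:i + period]
    return None


def router_accuracy(trace):
    """Fraction of labelled steps where the router picked the expected skill."""
    labelled = [s for s in trace if s.expected_skill is not None]
    if not labelled:
        return None
    hits = sum(s.skill == s.expected_skill for s in labelled)
    return hits / len(labelled)


# Illustrative trace: the agent searches and fetches twice before sending.
trace = [
    Step("search_orders", ("id=42",), "search_orders"),
    Step("fetch_invoice", ("id=42",), "fetch_invoice"),
    Step("search_orders", ("id=42",), "fetch_invoice"),
    Step("fetch_invoice", ("id=42",), "send_email"),
    Step("send_email", ("to=ops",), "send_email"),
]

print(repeated_steps(trace))
print(find_loop(trace))
print(router_accuracy(trace))
```

The same pattern could be extended to parameter extraction by adding an expected-parameters field and comparing it step by step. Asking a vendor to export traces in a form that supports checks like these is itself a useful test of transparency.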

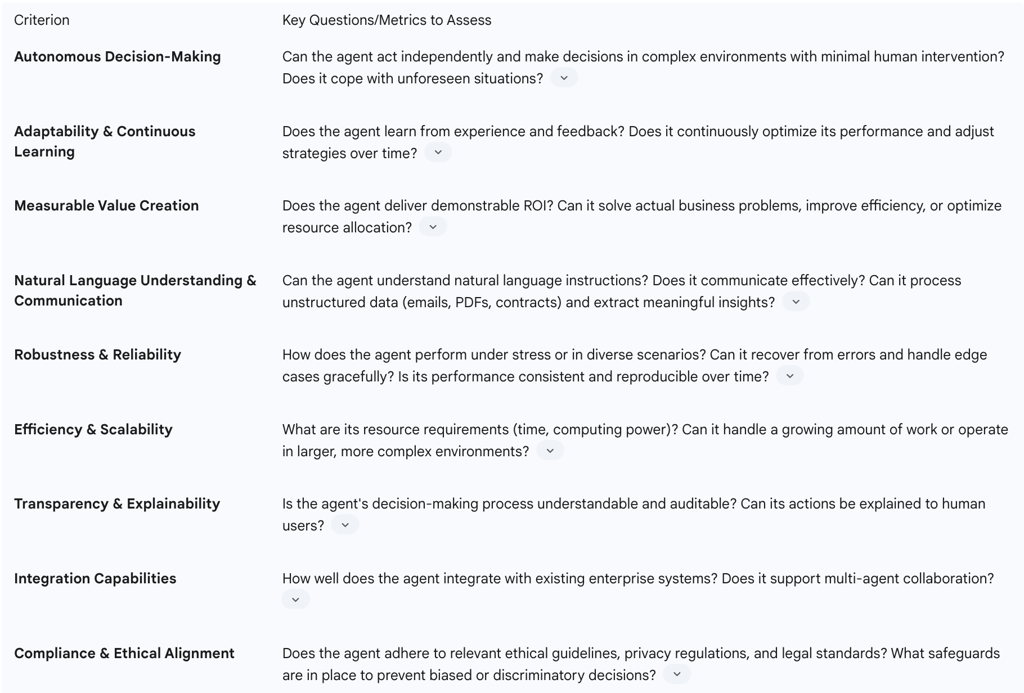

The following table provides a comprehensive checklist for evaluating AI agent products:

Table: Key Evaluation Criteria for AI Agents

Regulatory Landscape and Enforcement Actions

The increasing prevalence of "AI washing," including "agent washing," has attracted significant attention from regulatory authorities in the United States. Both the Securities and Exchange Commission (SEC) and the Department of Justice (DOJ) have expressed growing concern regarding misleading statements about AI capabilities and have begun pursuing enforcement actions in this domain.

Regulatory bodies are sending a strong message that any claims related to AI must be accurate, truthful, and backed by verifiable evidence. Rather than developing entirely new regulatory frameworks, the SEC is applying "classic enforcement frameworks – prohibiting false or misleading statements – to new technologies, systems and their associated arrangements and prospects". This indicates that existing securities laws and consumer protection regulations are deemed sufficient to address misrepresentations in the AI space.

Several enforcement actions underscore this heightened scrutiny:

In March 2024, the SEC imposed its first civil penalties on two companies for making misleading statements about their use of AI.

In April 2024, the SEC charged two investment advisory firms for misrepresenting the role of AI in their investment decision-making processes.

In July 2024, the SEC charged a corporate executive from a supposed AI hiring startup with fraud for using AI buzzwords without underlying substance.

A notable case involves Albert Saniger, founder of Nate, against whom the SEC and DOJ filed parallel actions in April 2025. Saniger was charged with allegedly making false and misleading statements to investors about Nate's purported AI technology; according to the allegations, the work was in fact performed manually by overseas contract workers. The SEC seeks disgorgement, civil penalties, and a permanent injunction, while the DOJ alleges securities and wire fraud, charges that carry potential prison sentences. This case highlights the severity of potential consequences for deceptive AI claims.

The increasing regulatory scrutiny and enforcement actions signify a fundamental shift in the burden of proof for AI claims, placing it squarely onto companies. This necessitates a proactive, technically informed, and robust compliance strategy that goes beyond mere marketing claims to verifiable evidence and transparent internal processes. Companies must have verifiable, documented evidence and internal governance structures to support their AI assertions, transforming what might have been perceived as a marketing function into a critical compliance and risk management responsibility.

Furthermore, the SEC's approach to individual liability and the explicit charges brought by the DOJ against Albert Saniger establish a clear and significant precedent: executives can be held personally responsible, potentially facing severe penalties including prison time, for misleading AI claims. The SEC has indicated it will approach individual liability in AI cases similarly to how it handles cybersecurity disclosure failures, examining whether individuals "knew or should have known about misrepresentations" and what actions they took or failed to take to prevent misleading disclosures. This dramatically elevates the stakes for corporate governance and oversight of AI disclosures, likely driving a much more cautious, thorough, and truthful approach to AI claims at the executive and board levels.

Companies are expected to communicate the actual use of AI in their products and services clearly and transparently. Vague or exaggerated claims, such as simply labeling products as "AI-powered," are likely to trigger increased scrutiny and require detailed substantiation regarding the tools, training models, and data used. Effective governance requires a deep understanding of the technology, with legal teams and high-level management involved in reviewing claims and implementing robust internal control frameworks. Organizations must also exercise due diligence when engaging with third-party vendors whose services impact their AI technology, requiring clear details about their tools, data science expertise, and whether the AI is proprietary or relies on third-party models like LLMs.

Navigating the AI Agent Future Responsibly

The advent of AI agents presents a truly transformative potential for businesses, promising unprecedented levels of efficiency, autonomy, and problem-solving capabilities. However, this promising future is currently obscured by the widespread practice of "agent washing," a deceptive phenomenon driven by market hype and the intense competition to appear innovative. This practice, which misrepresents basic automation as sophisticated AI, poses significant financial, operational, and reputational risks to organizations that fail to exercise proper diligence.

Successfully navigating this complex and evolving landscape demands a strategic and proactive approach. Organizations must prioritize establishing clear, internal definitions of what constitutes a genuine AI agent, thereby reducing reliance on external vendor propaganda and fostering independent decision-making. This internal clarity is foundational to conducting rigorous vendor evaluations, which must move beyond marketing rhetoric to demand tangible evidence, detailed demonstrations, and verifiable performance data. Investing in and cultivating robust internal AI expertise and skill sets is not merely a defensive measure against deception but a strategic imperative that enables informed decision-making and genuine innovation.

The current state of AI agent adoption indicates that the market is still in an early, less mature phase. Many projects are experimental or proofs of concept, driven more by hype than by proven business value. This suggests that businesses should approach AI agent adoption with a mindset of careful validation, starting with low-risk pilot projects to demonstrate concrete business value before committing to significant investments. True value from AI agents will not come from simply adopting "AI-powered" labels, but from a fundamental rethinking of business processes and the strategic integration of genuine agentic capabilities, focusing on enterprise productivity rather than just individual task augmentation.

Finally, beyond legal compliance and risk mitigation, actively combating "agent washing" and promoting genuine AI capabilities is an ethical imperative for the long-term, sustainable growth and societal acceptance of AI technology. Deception undermines the very foundation of trust necessary for widespread AI adoption. The long-term health, public acceptance, and ethical development of the entire AI industry are contingent upon its commitment to truthfulness and integrity. By prioritizing transparency, verifiable claims, and genuine innovation, organizations can not only mitigate their own risks but also contribute to a healthier, more trustworthy AI ecosystem that can truly deliver on its transformative promise without succumbing to a backlash fueled by widespread disillusionment.

FAQ

What is "Agent Washing" in the context of AI, and why is it a concern?

"Agent Washing" is a deceptive marketing practice where vendors exaggerate the capabilities of their products by rebranding existing, often simpler, technologies like traditional AI assistants, Robotic Process Automation (RPA) tools, and chatbots as sophisticated AI agents. The primary concern arises because these "agent-washed" products typically lack the core attributes of genuine AI agents, such as true autonomy, continuous learning, adaptability to complex environments, and the ability to create measurable value beyond their initial design. This practice is driven by the desire to capitalise on the intense AI hype, attract investment, gain a competitive advantage, and mislead customers. For businesses, this leads to significant risks like misguided investments, operational stagnation, vendor lock-in, and an erosion of trust in the broader AI industry.

How do true AI agents differ fundamentally from "agent-washed" products?

The fundamental distinctions between true AI agents and "agent-washed" products lie in their core operational characteristics. True AI agents possess:

Autonomous Decision-Making: They understand complex environments and make decisions with minimal human intervention, adapting to unforeseen situations. "Agent-washed" products are dependent on human input and follow rigid, pre-programmed sequences.

Adaptability & Continuous Learning: Genuine agents learn from experience and feedback, continuously optimising performance and adjusting strategies over time, often using advanced techniques like Reinforcement Learning. "Agent-washed" products lack this ability, their performance remaining static.

Context Understanding: True agents comprehend complex contexts and nuances, adjusting strategies based on real-time data. "Agent-washed" solutions rely on pre-programmed sequences regardless of context.

Measurable Value Creation: Authentic agents create tangible business value by solving problems, improving efficiency, or optimising resources. "Agent-washed" offerings provide limited analysis and often fail to deliver significant ROI.

Underlying Technology: True agents are built on principles of Agent Engineering, Deep Learning, and Reinforcement Learning, capable of advanced Natural Language Processing (NLP). "Agent-washed" products often use basic rule-based systems, rudimentary algorithms, RPA tools, or standalone Large Language Models (LLMs) as simple components.

Human Intervention: True agents require minimal human intervention for tasks and decision-making, while "agent-washed" products have a high dependency on human input.

Performance Evolution: Genuine agents continuously improve, whereas "agent-washed" solutions stagnate.

Handling Unstructured Data: True agents master unstructured data through advanced NLP, while "agent-washed" products struggle without human intervention.

Integration Capabilities: True agents are designed for multi-agent collaboration and seamless system interaction, unlike "agent-washed" products which often work in isolation.

What are the main risks for businesses that fall victim to "Agent Washing"?

Businesses falling victim to "Agent Washing" face several significant risks:

Misguided Investments and Missed Opportunities: Companies may invest in ineffective solutions that fail to deliver a return on investment (ROI), missing out on the genuine potential of intelligent automation. This can lead to financial losses and operational stagnation, with many such projects being abandoned within two years.

Operational Stagnation: Instead of achieving transformative change, businesses may find themselves with rigid, "agent-washed" technology that cannot adapt to changing circumstances or learn over time, hindering dynamic problem-solving.

Erosion of Trust and Market Integrity: Deceptive marketing practices like "Agent Washing" can lead to a broader loss of confidence in the AI industry, making it harder for legitimate AI advancements to gain market acceptance.

Security and Governance Concerns: The integration of misrepresented AI systems can increase exposure to security risks and introduce complex governance challenges due to their opaque "black box" nature, hindering accountability.

Vendor Lock-in and Interoperability Challenges: "Agent-washed" technology often has limited integration capabilities, leading to undesirable dependence on a single vendor and reduced flexibility.

Cost Overruns: Unclear usage controls and variable pricing associated with misrepresented AI solutions can result in unexpected and significant financial burdens.

Reputational Harm: Both companies engaging in "Agent Washing" and those that invest in fraudulent solutions risk substantial reputational damage.

How is "Agent Washing" related to broader patterns of deceptive marketing like "Greenwashing" or "Ethics Washing"?

"Agent Washing" is a specific manifestation of a broader pattern of deceptive marketing, specifically a subset of "AI washing." It is analogous to "greenwashing" and "ethics washing" in several ways:

Exploiting Hype/Trends: All these practices exploit heightened public awareness or market trends – AI hype, environmental consciousness, or social responsibility – to gain a perceived advantage.

Misleading Claims: They involve making false, exaggerated, or misleading claims about a product's or company's attributes (e.g., being "AI-powered," "environmentally friendly," or "ethical") without genuine underlying practice.

Virtue Signalling over Substance: Companies prioritise "virtue signalling" – appearing innovative, ethical, or sustainable – over substantive investment and genuine capability. This strategy leverages perception for financial gain.

Erosion of Trust: They all undermine trust in legitimate efforts within their respective domains. If consumers or businesses discover they have been misled, it can lead to widespread skepticism across the entire sector.

Evolution of Deception: As regulatory and public awareness grows around one form of "washing" (e.g., greenwashing), deceptive practices tend to evolve and migrate to new, less scrutinised domains (e.g., AI washing, and subsequently "agent washing").

What practical steps can organisations take to safeguard themselves against "Agent Washing"?

Organisations can safeguard themselves against "Agent Washing" through a strategic and comprehensive approach:

Establish Internal Clarity: Define AI agents and their capabilities clearly within the organisation, creating a robust internal knowledge base to reduce dependence on external vendor claims.

Rigorous Vendor Evaluation: Adopt an exhaustive approach to evaluating vendors. Demand detailed demonstrations, deployment references in relevant scenarios, and hard evidence to substantiate performance claims. Collaborate closely with technical teams to scrutinise the underlying AI technology, focusing on learning mechanisms, adaptability, and handling of unexpected data.

Focus on Business Value: Prioritise whether a solution genuinely solves actual business problems and delivers measurable ROI over conceptual marketing or advanced technological descriptions.

Develop Internal Expertise: Invest in training internal talent and building AI-specific skills to empower teams to make informed and independent decisions, transforming a defensive measure into a competitive capability.

Strategic Implementation: Start with low-risk pilot projects to reduce uncertainty and demonstrate concrete business value in a controlled environment before widespread implementation. Continuously monitor the evolving AI agent landscape.

Utilise Comprehensive Evaluation Frameworks: Employ structured metrics and agent-specific diagnostics to assess accuracy, effectiveness, efficiency, scalability, robustness, reliability, transparency, integration capabilities, and ethical alignment. Evaluate the agent's internal "path" to check for inefficiencies.
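
To make a few of these metrics concrete, the following sketch computes accuracy, efficiency, and reliability from a handful of recorded pilot runs. The `Run` record, the exact-match comparison, and the sample data are assumptions made for illustration; a real evaluation would usually need a more forgiving correctness check (for example a human reviewer or an LLM judge) and far more runs.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One recorded agent run (hypothetical record format)."""
    task_id: str     # which task was attempted
    output: str      # what the agent produced
    expected: str    # reference answer for the task
    seconds: float   # wall-clock time taken


def scorecard(runs):
    """Summarise accuracy, efficiency, and reliability over recorded runs."""
    if not runs:
        raise ValueError("no runs to score")

    # Accuracy: share of runs whose output matches the reference exactly.
    accuracy = sum(r.output == r.expected for r in runs) / len(runs)

    # Efficiency: mean wall-clock time per run.
    mean_seconds = sum(r.seconds for r in runs) / len(runs)

    # Reliability: among tasks that were run at least twice, the share
    # whose repeated runs all produced the same output.
    outputs_by_task = {}
    for r in runs:
        outputs_by_task.setdefault(r.task_id, []).append(r.output)
    repeated = [outs for outs in outputs_by_task.values() if len(outs) >= 2]
    reproducibility = (
        sum(len(set(outs)) == 1 for outs in repeated) / len(repeated)
        if repeated else None
    )

    return {
        "accuracy": accuracy,
        "mean_seconds": mean_seconds,
        "reproducibility": reproducibility,
    }


# Illustrative data: two tasks, each attempted twice.
runs = [
    Run("t1", "42", "42", 1.0),
    Run("t1", "42", "42", 3.0),
    Run("t2", "yes", "no", 2.0),
    Run("t2", "no", "no", 2.0),
]

print(scorecard(runs))
```

Running the same scorecard against a vendor demonstration and against an internal pilot gives a like-for-like comparison that does not depend on the vendor's own reporting.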

What core capabilities define a true AI agent, distinguishing it from simpler AI tools?

A true AI agent is a sophisticated software entity that perceives its environment, makes informed decisions, takes purposeful actions, and autonomously or semi-autonomously pursues a given goal. Key capabilities defining a genuine AI agent include:

Autonomous Decision-Making: Ability to understand complex environments, make independent decisions, and cope with unforeseen situations with minimal human oversight.

Adaptability and Continuous Learning: Profound ability to learn from experience and feedback, continuously optimising performance and adjusting strategies over time (e.g., through Reinforcement Learning).

Natural Language Understanding and Communication: Capacity to comprehend natural language instructions, communicate effectively with humans and other systems, and extract insights from unstructured data through advanced Natural Language Processing (NLP).

Measurable Value Creation: Demonstrates the ability to create tangible, measurable value (e.g., efficiency, improved decision-making, optimised resource allocation) by solving actual business problems.

Multi-Agent Collaboration: Capability to work effectively and intelligently with other agents to accomplish complex tasks, indicating integrated and distributed intelligent systems.

Robustness and Reliability: Performs consistently across diverse scenarios, recovers from errors, handles edge cases gracefully, exhibits resilience, and provides consistent, reproducible results.

Efficiency and Scalability: Designed to efficiently utilise resources and scale to handle growing workloads in complex environments without performance degradation.

What is the regulatory landscape surrounding "AI Washing," and what are the implications for companies and executives?

The regulatory landscape regarding "AI Washing" in the U.S. is intensifying, with both the Securities and Exchange Commission (SEC) and the Department of Justice (DOJ) expressing significant concern and pursuing enforcement actions. Regulatory bodies are applying existing "classic enforcement frameworks," prohibiting false or misleading statements, to new AI technologies.

Implications for companies and executives include:

Increased Scrutiny and Burden of Proof: Companies now bear the burden of proving that their AI claims are accurate, truthful, and backed by verifiable evidence. Vague or exaggerated claims will trigger demands for detailed substantiation.

Civil Penalties and Fraud Charges: The SEC has already imposed civil penalties for misleading AI statements and charged investment advisory firms and executives with fraud.

Individual Liability: Executives can be held personally responsible, potentially facing severe penalties including prison time, for misleading AI claims. The SEC will examine whether individuals "knew or should have known about misrepresentations."

Enhanced Corporate Governance: This necessitates a proactive, technically informed, and robust compliance strategy. Legal teams and high-level management must be involved in reviewing claims and implementing strong internal control frameworks.

Due Diligence on Third-Party Vendors: Organisations must exercise due diligence when engaging with third-party vendors whose services impact their AI technology, requiring clear details about their tools, data science expertise, and reliance on third-party models.

Why is fostering internal AI expertise crucial for organisations, beyond simply mitigating risks?

Fostering internal AI expertise is crucial for organisations not only to mitigate the risks of "Agent Washing" but also as a strategic imperative for several reasons:

Independent Decision-Making: Strong internal knowledge reduces dependence on external vendor propaganda, empowering companies to make more informed and independent decisions regarding AI investments.

Informed Scrutiny: Internal AI literacy enables more effective and critical evaluation of vendor claims, allowing organisations to differentiate genuine innovation from mere marketing rhetoric.

Strategic Competitive Capability: By understanding true AI agent capabilities, organisations can proactively identify and leverage these for strategic gain, transforming a defensive measure into a powerful competitive advantage.

Effective Implementation: Internal expertise is vital for designing AI-agent-focused workflows from the ground up, rethinking processes to effectively leverage agents for enterprise productivity rather than just augmenting individual tasks.

Driving Genuine Innovation: A deep understanding of AI allows organisations to move beyond superficial adoption to truly integrate and develop advanced AI solutions that create tangible business value and drive transformative change.